Workflow Overview

This page illustrates the objectives of torc with an example workflow.

The workflow has four jobs:

- preprocess: Reads an input file and produces two output files.
- work1: Performs work on one of the output files from the preprocess stage.
- work2: Performs work on the other output file from the preprocess stage.
- postprocess: Reads the output files from the work stage and produces the final results.

Dependencies

The work jobs can run only after the preprocess job finishes, and they can run in parallel with each other. The postprocess job can run only after both work jobs finish.

These dependencies can be defined in two ways. They can be defined indirectly, through job-file relationships. They can also be defined directly by the user, through job-job relationships.

Job-File Relationships

![Job-file relationships: preprocess needs inputs.json and produces f1.json and f2.json; work1 needs f1.json and produces f3.json; work2 needs f2.json and produces f4.json; postprocess needs f3.json and f4.json and produces f5.json](_images/graphviz-ff42f9a4dc5fb412ed91c23d5a97894bcffdbf41.png)
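Job-job dependencies can be inferred from these job-file relationships. The rule is that a job blocks another job when it produces a file the other job needs. The Python sketch below illustrates that inference. It assumes a hypothetical `Job` record with `needs` and `produces` sets of file names, and it assumes each file has a single producer. It is not torc's API.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    """Hypothetical job description: the files it reads and the files it writes."""
    name: str
    needs: set[str] = field(default_factory=set)
    produces: set[str] = field(default_factory=set)


def infer_blocks(jobs: list[Job]) -> set[tuple[str, str]]:
    """Return (blocker, blocked) pairs: blocker produces a file that blocked needs."""
    producer_of: dict[str, str] = {}
    for job in jobs:
        for name in job.produces:
            producer_of[name] = job.name  # assumes one producer per file
    edges = set()
    for job in jobs:
        for name in job.needs:
            # Files with no producer (user-supplied inputs such as inputs.json) add no edge.
            if name in producer_of:
                edges.add((producer_of[name], job.name))
    return edges


jobs = [
    Job("preprocess", needs={"inputs.json"}, produces={"f1.json", "f2.json"}),
    Job("work1", needs={"f1.json"}, produces={"f3.json"}),
    Job("work2", needs={"f2.json"}, produces={"f4.json"}),
    Job("postprocess", needs={"f3.json", "f4.json"}, produces={"f5.json"}),
]

for blocker, blocked in sorted(infer_blocks(jobs)):
    print(f"{blocker} blocks {blocked}")
```

The four printed edges match the job-job relationships shown next. The user therefore only has to declare files, not the ordering.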

Job-Job Relationships

![Job-job relationships: preprocess blocks work1 and work2; work1 and work2 each block postprocess](_images/graphviz-190fbdbf5ce1c4ddae208401d3a0c51453ca951c.png)
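Given the "blocks" edges, one way to see which jobs may run in parallel is to group them into levels with Kahn's topological sort. Each level contains only jobs whose blockers all sit in earlier levels. This is an illustrative Python sketch, not torc's scheduler. The function name `stages` is hypothetical.

```python
from collections import defaultdict


def stages(jobs: list[str], blocks: list[tuple[str, str]]) -> list[list[str]]:
    """Group jobs into levels (Kahn's algorithm); jobs in one level can run in parallel."""
    remaining_blockers = {job: 0 for job in jobs}
    downstream: dict[str, list[str]] = defaultdict(list)
    for blocker, blocked in blocks:
        remaining_blockers[blocked] += 1
        downstream[blocker].append(blocked)

    ready = sorted(job for job, count in remaining_blockers.items() if count == 0)
    levels = []
    while ready:
        levels.append(ready)
        next_ready = []
        for job in ready:
            # Finishing this job removes one blocker from each downstream job.
            for nxt in downstream[job]:
                remaining_blockers[nxt] -= 1
                if remaining_blockers[nxt] == 0:
                    next_ready.append(nxt)
        ready = sorted(next_ready)

    if sum(len(level) for level in levels) != len(jobs):
        raise ValueError("dependency cycle detected")
    return levels


jobs = ["preprocess", "work1", "work2", "postprocess"]
blocks = [
    ("preprocess", "work1"),
    ("preprocess", "work2"),
    ("work1", "postprocess"),
    ("work2", "postprocess"),
]
print(stages(jobs, blocks))
# → [['preprocess'], ['work1', 'work2'], ['postprocess']]
```

Level grouping is a simplification. A scheduler can instead start each job as soon as its own blockers finish, without waiting for the whole previous level.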

Resource Requirements

The work scripts require much more compute and time than the other stages.

![Resource requirements: preprocess requires small (1 CPU, 10 minutes); work1 and work2 require large (18 CPUs, 24 hours); postprocess requires medium (4 CPUs, 1 hour)](_images/graphviz-ff26ef462049e9503cb91db91431a886df52ad1c.png)
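One way to quantify "much more compute" is to multiply each job's CPUs by its runtime to get requested CPU-hours. The small Python sketch below does this. The dictionary layout is an illustrative assumption, not torc's configuration format. The numbers are requested maxima, not measured usage.

```python
# Requirement definitions from the graph above: (CPUs, runtime in minutes).
requirements = {
    "small": (1, 10),
    "medium": (4, 60),
    "large": (18, 24 * 60),
}

# Which requirement definition each job uses.
job_requirements = {
    "preprocess": "small",
    "work1": "large",
    "work2": "large",
    "postprocess": "medium",
}


def cpu_hours(job: str) -> float:
    """Requested CPU-hours for a job: CPUs multiplied by runtime in hours."""
    cpus, minutes = requirements[job_requirements[job]]
    return cpus * minutes / 60


for job in job_requirements:
    print(f"{job}: {cpu_hours(job):.2f} CPU-hours")
# → preprocess: 0.17 CPU-hours
# → work1: 432.00 CPU-hours
# → work2: 432.00 CPU-hours
# → postprocess: 4.00 CPU-hours
```

The two work jobs account for 864 of the roughly 868 requested CPU-hours.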

Compute Node Efficiency

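Run multiple jobs in parallel on a single compute node when their combined resource requirements fit. For example, a node with at least 36 CPUs can run work1 and work2 at the same time (18 + 18 CPUs).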
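The Python sketch below shows a simple first-fit-decreasing packing of ready jobs onto one node by CPU count. The function `pack_jobs` and the node sizes are hypothetical. A real scheduler would also check memory, GPUs, and whether the node's allocation lasts long enough; both work jobs need 24 hours.

```python
def pack_jobs(ready: dict[str, int], node_cpus: int) -> tuple[list[str], int]:
    """Greedily choose ready jobs whose combined CPU requirement fits on one node.

    Larger jobs are tried first (first-fit decreasing); ties are broken by name.
    Returns the selected job names and the number of CPUs left idle.
    """
    selected = []
    free = node_cpus
    for name, cpus in sorted(ready.items(), key=lambda item: (-item[1], item[0])):
        if cpus <= free:
            selected.append(name)
            free -= cpus
    return selected, free


print(pack_jobs({"work1": 18, "work2": 18}, node_cpus=36))  # → (['work1', 'work2'], 0)
print(pack_jobs({"work1": 18, "work2": 18}, node_cpus=24))  # → (['work1'], 6)
```

Greedy packing is fast but not always optimal. It is shown here only to illustrate the "fit jobs onto one node" objective.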

Intelligent Restarts

The orchestrator can rerun only the jobs that are affected by a change or failure:

- If the user finds a bug in inputs.json, all jobs must be rerun.
- If work2 used more memory than expected and failed, only work2 and postprocess need to be rerun.
- If postprocess took more time than expected and timed out, only postprocess needs to be rerun.
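The rerun rule can be stated as a graph traversal. Start from the jobs directly invalidated: a failed job, or the jobs that need a changed file. Then add every job downstream of them. The Python breadth-first-search sketch below illustrates this. The `blocks` adjacency map and `jobs_to_rerun` are illustrative names, not torc's API.

```python
from collections import deque

# Each job maps to the jobs it blocks (from the job-job relationships).
blocks = {
    "preprocess": ["work1", "work2"],
    "work1": ["postprocess"],
    "work2": ["postprocess"],
    "postprocess": [],
}


def jobs_to_rerun(start: list[str], blocks: dict[str, list[str]]) -> set[str]:
    """Return the starting jobs plus every job downstream of them (breadth-first)."""
    rerun = set(start)
    queue = deque(start)
    while queue:
        job = queue.popleft()
        for nxt in blocks.get(job, []):
            if nxt not in rerun:
                rerun.add(nxt)
                queue.append(nxt)
    return rerun


# A bug in inputs.json invalidates preprocess, the only job that needs it.
print(sorted(jobs_to_rerun(["preprocess"], blocks)))   # → ['postprocess', 'preprocess', 'work1', 'work2']
print(sorted(jobs_to_rerun(["work2"], blocks)))        # → ['postprocess', 'work2']
print(sorted(jobs_to_rerun(["postprocess"], blocks)))  # → ['postprocess']
```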

Resource Utilization Metrics

Compute nodes record actual CPU/GPU/memory utilization statistics.
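The sketch below shows one way a job runner could measure a job's wall time, CPU time, and peak memory on Unix, using only Python's standard `resource` and `subprocess` modules. It is an assumption about how such statistics might be gathered, not a description of how torc does it. It does not cover GPUs.

```python
import resource
import subprocess
import sys
import time


def run_and_measure(cmd: list[str]) -> dict[str, float]:
    """Run a command and report wall time, CPU time, and peak memory (Unix only).

    CPU time is the change in RUSAGE_CHILDREN across the run. ru_maxrss is the
    peak resident set size of the largest child waited for so far (kilobytes on
    Linux, bytes on macOS), so it is per-job accurate only if this process runs
    a single job.
    """
    before = resource.getrusage(resource.RUSAGE_CHILDREN)
    start = time.monotonic()
    completed = subprocess.run(cmd, check=False)
    elapsed = time.monotonic() - start
    after = resource.getrusage(resource.RUSAGE_CHILDREN)
    cpu_seconds = (after.ru_utime - before.ru_utime) + (after.ru_stime - before.ru_stime)
    return {
        "return_code": completed.returncode,
        "wall_seconds": elapsed,
        "cpu_seconds": cpu_seconds,
        # Average number of busy CPUs over the job's lifetime.
        "avg_cpus_used": cpu_seconds / elapsed if elapsed > 0 else 0.0,
        "max_rss": after.ru_maxrss,
    }


if __name__ == "__main__":
    print(run_and_measure([sys.executable, "-c", "sum(range(10**6))"]))
```

Comparing `avg_cpus_used` and `max_rss` against the requested resources shows whether a job's requirement definition is too large or too small.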

User-Defined Data

Jobs can store user-defined input or output data.

![User-defined data: postprocess stores {key1: 'value1', key2: 'value2'}](_images/graphviz-db4355ea50ad6c7267673fda3a5405e1f261a41e.png)

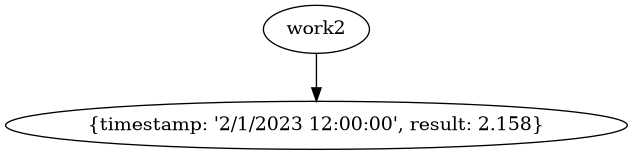

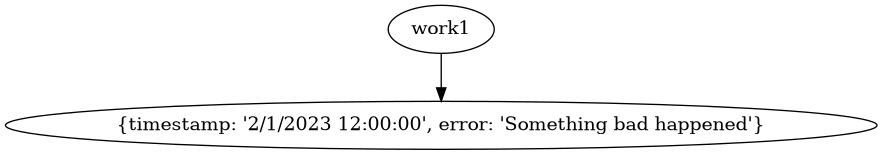
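The Python sketch below is a minimal in-memory model of per-job user data stored as JSON objects. The class `UserDataStore` and its methods are hypothetical and only illustrate the idea. They are not torc's API.

```python
import json


class UserDataStore:
    """Hypothetical in-memory store of user-defined JSON data, keyed by job name."""

    def __init__(self) -> None:
        self._data: dict[str, list[dict]] = {}

    def store(self, job: str, data: dict) -> None:
        # Round-trip through JSON; json.dumps raises TypeError for unserializable values.
        self._data.setdefault(job, []).append(json.loads(json.dumps(data)))

    def get(self, job: str) -> list[dict]:
        # Return a copy of the list so callers cannot modify the store's list in place.
        return list(self._data.get(job, []))


store = UserDataStore()
store.store("postprocess", {"key1": "value1", "key2": "value2"})
print(store.get("postprocess"))
# → [{'key1': 'value1', 'key2': 'value2'}]
```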

User-Defined Events

Jobs can post events with structured data to aid analysis and debugging, or to store result data.
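The Python sketch below shows structured events appended to a JSON Lines file and filtered by category afterward. The field names (`timestamp`, `job`, `category`, `data`), the file layout, and the example payloads are illustrative assumptions, not torc's event format.

```python
import json
import tempfile
import time
from pathlib import Path
from typing import Optional


def post_event(log_path: Path, job: str, category: str, data: dict) -> dict:
    """Append one structured event as a single JSON line and return it."""
    event = {"timestamp": time.time(), "job": job, "category": category, "data": data}
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(event) + "\n")
    return event


def read_events(log_path: Path, category: Optional[str] = None) -> list[dict]:
    """Load all events, optionally keeping only one category."""
    lines = log_path.read_text(encoding="utf-8").splitlines()
    events = [json.loads(line) for line in lines if line]
    return [e for e in events if category is None or e["category"] == category]


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "events.jsonl"
    # Hypothetical payloads: a debug checkpoint and a result summary.
    post_event(path, "work1", "progress", {"message": "checkpoint written", "step": 100})
    post_event(path, "postprocess", "result", {"rows_written": 1200})
    print([e["job"] for e in read_events(path, category="result")])
    # → ['postprocess']
```

Appending one JSON object per line keeps writes simple. It also lets analysis tools stream the file without parsing it all at once.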